Variscite marks 20 years of innovation

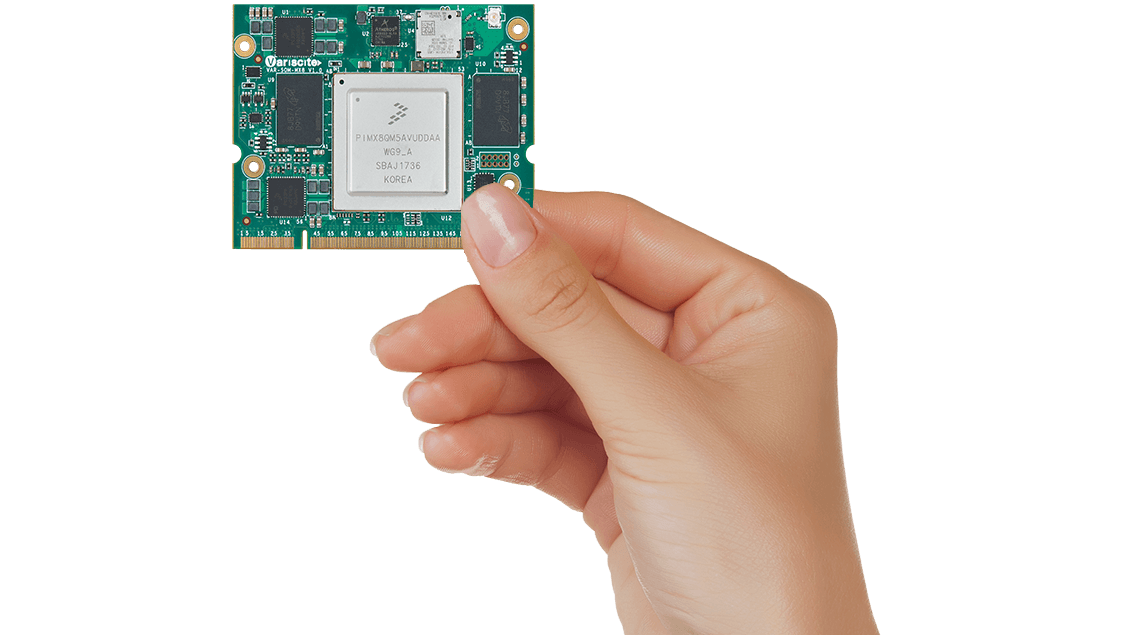

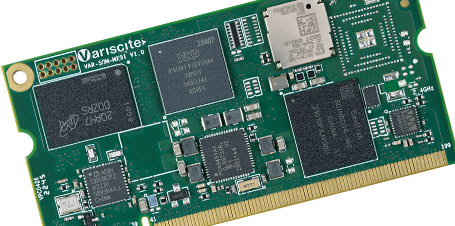

Variscite, Your Trusted ARM System On Module (SoM) Partner

Always to the highest standard

What are we up to?

New Release: Android 14.0.0_1.0.0 v1.0 for VAR-SOM-MX8M-NANO modules

Release date: 03/18/2023

Variscite is pleased to announce the new Android 14.0.0_1.0.0 v1.0 release for the VAR-SOM-MX8M-NANO System on Module.

Modules: VAR-SOM-MX8M-NANO...

How to Mirror Displays of Differing Resolutions Using Yocto on Variscite’s i.MX8M P...

In this guest blog post from Integrated Computer Solutions (ICS), the authors delve into the intricacies of mirroring displays with varying resolutions using Yocto on Variscite’s...

Celebrating Two Decades of Pioneering the Embedded Market: A Look Back at Variscite...

We're thrilled to share a Variscite milestone: an exhilarating 20-year journey of innovation, growth, and leadership within the embedded industry. T...

Quality
